I believe the OAuth 2.0 definition of the “client” is too restrictive, and that this restriction has effectively closed off any possibility of OAuth 2.0 supporting true third-party access on the Internet. Although OAuth speaks in terms of Alice-to-Bob sharing of resources, in reality it caters only to Alice-to-client sharing (where the “client” is a piece of application software, possibly operated by a third party). This point becomes clear when we compare the OAuth view of Alice-to-Bob sharing against the UMA view.

The definition of “client” in OAuth 2.0 (RFC6749) is as follows:

An application making protected resource requests on behalf of the resource owner and with its authorization.

The UMA (draft-06) definition of the “client” is as follows:

An application making protected resource requests with the resource owner’s authorization and on the requesting party’s behalf.

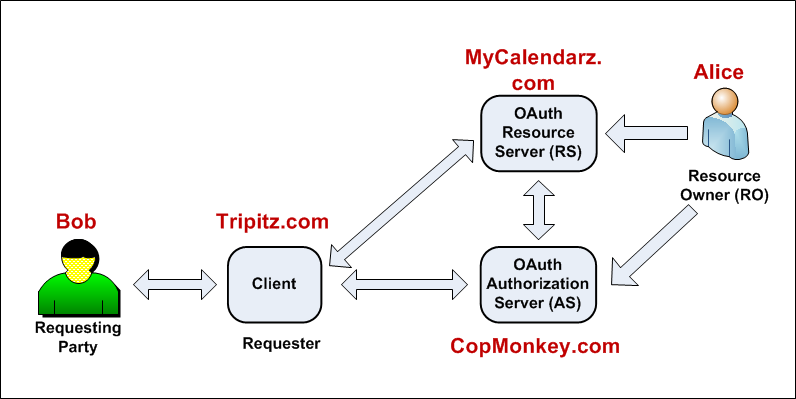

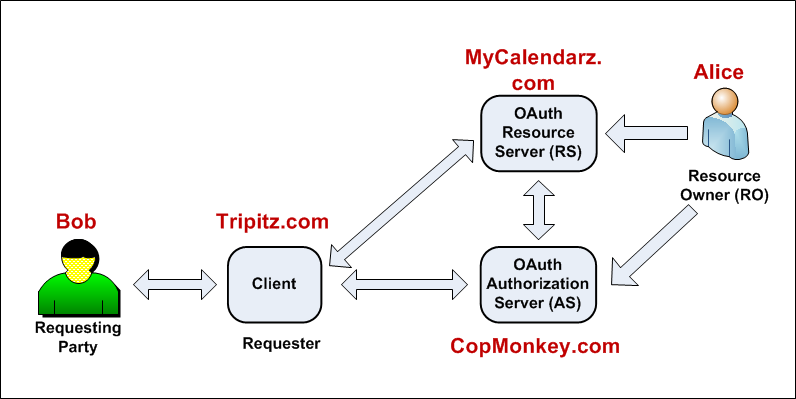

UMA makes a clear distinction between the “Requesting Party” and the Client (or the “Requester”). The Requesting Party is the human being (or an organization, or a human legally representing an organization), while the Client in UMA is the “proxy” entity through which the Requesting Party accesses the resources hosted at the Resource Server. In the UMA view, Bob is the human person who is using the client but who may not be in full control of all aspects of the client’s operation.
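The difference between the two definitions can be sketched as a small data model. This is only an illustration, not part of either specification: the class name `ProtectedResourceRequest`, its fields, and the identifiers `"client-app"`, `"alice"` and `"bob"` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ProtectedResourceRequest:
    """Hypothetical model of a request made by a client application."""
    client: str                              # the application making the request
    resource_owner: str                      # the party whose authorization is required
    requesting_party: Optional[str] = None   # UMA only: the party the client acts for

    def on_behalf_of(self) -> str:
        # OAuth 2.0 definition: the client acts on behalf of the resource owner.
        # UMA definition: the client acts on behalf of a distinct requesting party.
        if self.requesting_party is not None:
            return self.requesting_party
        return self.resource_owner


# OAuth 2.0 view: there is no separate requesting party.
oauth_request = ProtectedResourceRequest(client="client-app", resource_owner="alice")

# UMA view: Alice authorizes, but the client acts for Bob.
uma_request = ProtectedResourceRequest(
    client="client-app", resource_owner="alice", requesting_party="bob"
)

print(oauth_request.on_behalf_of())
print(uma_request.on_behalf_of())
```

In the OAuth-style request there is simply no field in which Bob could appear, which is the restriction discussed here.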

Nat Sakimura (from the OpenID Foundation) in a recent blog post corrects the common misconception that “many people seem to think that this client as “Alice” the resource owner.” I absolutely agree with this view. However, in order to truly support realistic Alice-to-Bob sharing, OAuth 2.0 needs to expand its definition and understanding of the client.

The following diagram illustrates further. In this diagram, Alice wants to let Bob access her calendar so that Bob can adjust his travel itinerary to match Alice’s itinerary. Alice is the owner of her resource (her calendar file), which resides at the Resource Server (operated by MyCalendarz.com). Bob is using the client (the application operated by Tripitz.com) to access Alice’s calendar file at MyCalendarz.com. The client is therefore acting on behalf of Bob.

The OAuth 2.0 definition of “client” fails to recognize that a (legal) relationship may exist between the human person (Bob, who is driving the “client” application at Tripitz.com) and the company called Tripitz.com. Thus, in Alice-to-Bob sharing, OAuth assumes that Bob is directly accessing the resources, whereas in reality Bob is more likely to be using his browser to “remotely manipulate” the client application (operated by the third party Tripitz.com) to access Alice’s resources at the Resource Server (MyCalendarz.com). The UMA architecture recognizes that, in practice, Bob will likely need to have an account at Tripitz.com, for which Bob will be required to accept the Terms of Service (TOS) of Tripitz.com.

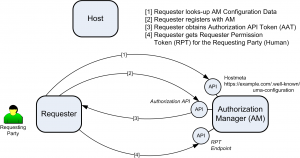

UMA recognizes (i) the Bob-to-Tripitz relationship and (ii) the Tripitz-to-CopMonkey relationship by requiring two types of OAuth tokens to be presented by the client:

- The Authorization API Token (AAT): this is the OAuth token that belongs to the client (Tripitz.com) and which authenticates the client to the Authorization Server.

- The Requesting Party Token (RPT): this is the OAuth token that belongs to the Requesting Party (Bob) and which authenticates Bob to both the Authorization Server and the Resource Server.
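As a rough sketch of the two-token arrangement described above, the following models which token the client would show to which server. It is not the UMA wire protocol: the `Token` class, the `tokens_for` helper, the audience labels and the token values are hypothetical placeholders, and the only standard element is the `Authorization: Bearer` header form used for OAuth bearer tokens.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Token:
    kind: str      # "AAT" or "RPT"
    belongs_to: str  # the party the token belongs to, per the description above
    value: str     # opaque placeholder, not a real token


def tokens_for(audience: str, aat: Token, rpt: Token) -> List[Token]:
    """Return the tokens the client presents to the given server (hypothetical helper)."""
    if audience == "authorization_server":
        # The AAT authenticates the client; the RPT authenticates Bob.
        return [aat, rpt]
    if audience == "resource_server":
        # Only the RPT is meaningful to the Resource Server.
        return [rpt]
    raise ValueError(f"unknown audience: {audience}")


def bearer_header(token: Token) -> Dict[str, str]:
    """Build an OAuth bearer-token Authorization header for one token."""
    return {"Authorization": f"Bearer {token.value}"}


# Placeholder tokens for the example in this post.
aat = Token(kind="AAT", belongs_to="Tripitz.com", value="aat-placeholder")
rpt = Token(kind="RPT", belongs_to="Bob", value="rpt-placeholder")

for audience in ("authorization_server", "resource_server"):
    kinds = [t.kind for t in tokens_for(audience, aat, rpt)]
    print(audience, kinds)
```

Keeping the two tokens as separate objects with separate owners mirrors the point of the distinction: the client and the Requesting Party remain individually identifiable at each server.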

This distinction between the Requester and the Requesting Party in UMA allows legal agreements (i.e., a trust framework) to recognize Bob as distinct from Tripitz.com, and to assign different legal obligations to these two entities. From a risk management perspective, it also allows finer-grained analysis and assignment of risk to these entities.

In summary, in order to address the true Alice-to-Bob sharing of resources, OAuth 2.0 needs to:

- expand its understanding of “client” to mean an application owned and operated by a third party (not Bob).

- add another player to the ecosystem, namely Bob the Requesting Party.

- define that the client is acting on behalf of Bob.